We live in an age of social-media mayhem.

Brad Parscale, the man who will be campaign manager for Donald Trump’s 2020 re-election effort, knows all about the mayhem. Less well-known than such former Trump inner-circle figures as Steve Bannon and Corey Lewandowski, Parscale was digital director in the 2016 Trump campaign.

This is Parscale explaining one strategy: “I asked them, I told Facebook, I wanna spend $100-million on your platform, so send me a manual. They said, ‘We don’t have a manual,’ so I said, ‘Well then send me a human manual.’” They duly did, and Parscale was told how to find and micro-target responsive audiences. Which he did, successfully, with Facebook’s help.

Parscale’s anecdote comes in the sobering, gripping and deeply unsettling The Facebook Dilemma (Tuesday, PBS, 10 p.m. ET on Frontline). It might more accurately be called “How Dangerous Is Facebook?” and allow the audience to decide on what degree of foul dishonesty or brazen ineptitude can be attached to the social-media platform.

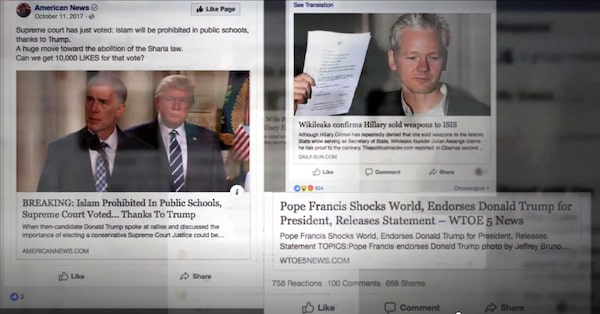

The fire of 'fake news' spread through Facebook during the U.S. election of 2016. (PBS Frontline screengrab)

The Frontline investigation is a serious-minded, two-part, four-hour examination of Facebook’s rise and influence. What airs Tuesday, after the first part’s look at founder Mark Zuckerberg and the start of his company, tackles the guts of the main issue. It opens with the background voices of Hillary Clinton and Donald Trump accepting their party’s nominations. Then it shows Zuckerberg, live-streaming from his backyard, while smoking brisket and chatting idly about getting ready to watch the first presidential debate.

He looks for all the world like a lightweight, a nincompoop gee-whizzing about this cool tool he created for chatting with people. It is, as we know now, a dangerously disingenuous pose. This man has shaped the contemporary world as much as presidents, prime ministers, kings, queens and mighty armies.

Made by veteran 60 Minutes and Frontline producer James Jacoby, the program does not have a shocking exposé climax. What it has is a relentlessly revealing chronicle of how Facebook was weaponized to sow doubt and division and tell lies for political and more sinister purposes. The interviews with current and former staff members lead the viewer to doubt Facebook’s late-arriving claims that it truly cares about being used in this way.

The gist of the program is that Facebook does not take responsibility for the impact of what it spreads. It doesn’t act until forced to do so, and by then, it’s often too late and it doesn’t do very much anyway. Its news-feed stratagem made it the most powerful news platform in the world, but it took no responsibility for what was spread. Now it makes tut-tut noises.

At the core of the matter is one key discovery about social media that’s not new any more: Fear and anger create “greater engagement” online and therefore more advertising value. The integrity of what stokes the fear and anger is inconsequential as far as Facebook is concerned.

We are all aware at this point of the fire of “fake news” spread through Facebook during the U.S. election of 2016: “Pope endorses Trump” and other outright untruths. Zuckerberg went on the record to claim that the idea of such untruths influencing the election was “a pretty crazy idea.”

What is less well known to the casual user of Facebook is the platform’s tremendous influence in the Philippines and Myanmar. In the Philippines, some 97 per cent of internet users are on Facebook and use it as a news source. The program suggests that President Rodrigo Duterte’s backers were using fake accounts and fake followers to distort the news narrative and make his policies appear more popular. Facebook executives were warned, but did little or nothing for two years.

In Myanmar, rights groups and the United Nations say Facebook was used to disseminate inflammatory, anti-Muslim speech aimed at the Rohingya Muslim minority, and the result is described as ethnic cleansing and genocide. Again, Facebook had early warnings but did little or nothing. Here’s the thing: Facebook knows that hyperpartisanship creates greater engagement.

Multiple Facebook executives are interviewed by Frontline. Watching and listening is a soul-destroying experience. Clearly agreed upon by the corporation is the lame admission, “we were too slow to act,” which is said often. What the viewer twigs to, soon, is that we are hearing the gibberish of a high-tech company’s reverse-spin – Facebook is the solution, not the problem. It exists to “amplify the good and minimize the bad.”

It is particularly galling to hear from Naomi Gleit, “vice-president of social good and senior director of the Growth, Engagement and Mobile Team,” a woman who is Facebook’s longest-serving employee after Zuckerberg. Cagey but smiling and trying hard to sound concerned, she’s the one talking about “minimizing bad experiences” on Facebook. She might as well say, “Let them eat cake.” The disconnect is that disturbing. Mayhem, what mayhem?